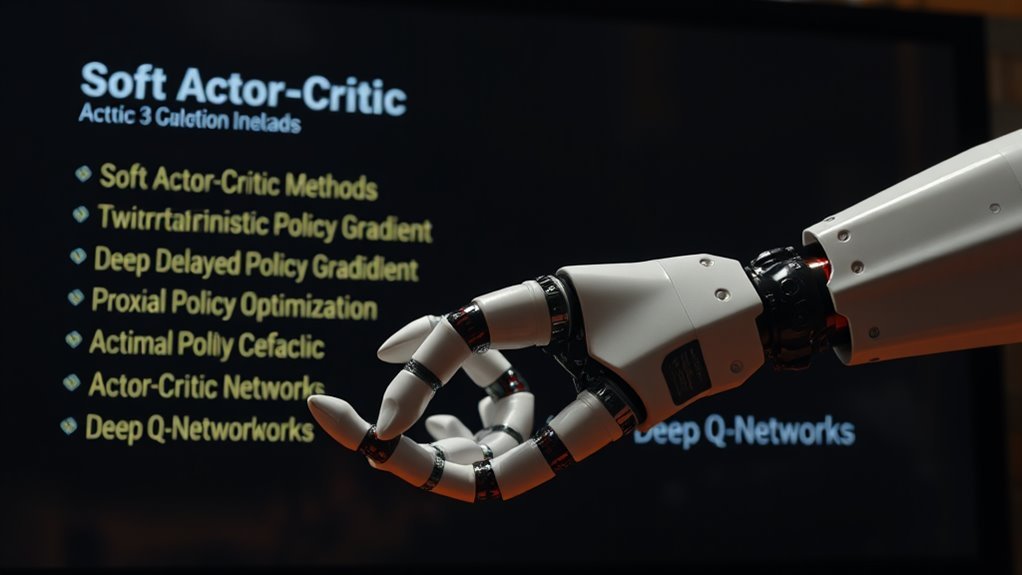

You're equipping your AI agents with reinforcement learning, and now you need to choose the right methods. You'll likely consider Deep Q-Networks, Policy Gradient Techniques, and Actor-Critic Methods, with Proximal Policy Optimization and Soft Actor-Critic Algorithms as other top contenders. Each method drives decision-making in a different way and has its own strengths and typical applications, so understanding them will help you decide which ones fit your agents' specific needs.

Need-to-Knows

- Deep Q-Networks (DQN) use a neural network to approximate the Q-value function.

- Policy Gradient Techniques optimize policies directly.

- Actor-Critic Methods combine a policy (the actor) with a value function (the critic).

- Proximal Policy Optimization (PPO) keeps updates stable by limiting how far the policy can change.

- Soft Actor-Critic Algorithms maximize expected reward plus policy entropy.

Deep Q-Networks

You'll often find Deep Q-Networks (DQN) at the core of AI agents that operate in complex environments. A DQN uses a neural network to approximate the Q-value function, the expected return of taking each action in a given state, which lets the agent choose actions directly from high-dimensional input such as raw pixels.

This approach has been particularly effective on Atari games, where DQN learned to play many titles directly from screen pixels. To make learning more efficient, DQN uses experience replay: it stores past transitions (state, action, reward, next state) in a replay buffer and trains on random minibatches sampled from it, which reuses data and breaks the correlation between consecutive steps.

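DQN also uses a target network, a copy of the Q-network whose weights are updated only periodically, to compute the Q-value targets. Keeping the targets fixed between these updates makes training more stable and reduces the risk of divergence.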
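To make the replay buffer and target network concrete, here is a minimal Python sketch. It is not the original DQN implementation: `Transition`, `ReplayBuffer`, and `dqn_target` are hypothetical names, and a plain lookup table (`target_q`) stands in for the target network's outputs so the example runs without a deep learning library.

```python
import random
from collections import deque
from typing import List, NamedTuple


class Transition(NamedTuple):
    state: int
    action: int
    reward: float
    next_state: int
    done: bool


class ReplayBuffer:
    """Fixed-capacity experience replay; the oldest transitions are dropped first."""

    def __init__(self, capacity: int) -> None:
        self.buffer: deque = deque(maxlen=capacity)

    def push(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform random minibatch: breaks the correlation between consecutive steps.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


def dqn_target(t: Transition, target_q: List[List[float]], gamma: float = 0.99) -> float:
    """TD target y = r + gamma * max_a' Q_target(s', a'); no bootstrapping at terminal states.

    target_q is a hypothetical lookup table standing in for the target network's outputs.
    """
    if t.done:
        return t.reward
    return t.reward + gamma * max(target_q[t.next_state])
```

In a full agent, you would push every environment step into the buffer, sample a minibatch once it holds enough transitions, move the online network's Q(s, a) toward `dqn_target`, and copy the online weights into the target network at a fixed interval.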

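As you investigate DQNs, you'll find variants such as Double DQN and Dueling DQN. Double DQN reduces the overestimation bias of the max operator by choosing the next action with the online network but evaluating it with the target network. Dueling DQN splits the network into separate streams for the state value and the action advantages, which improves how state values are represented.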
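The following sketch contrasts the standard and Double DQN targets and shows the dueling aggregation Q(s, a) = V(s) + A(s, a) - mean A(s, ·). The function names are illustrative, and plain lists of numbers stand in for the networks' outputs at the next state.

```python
from typing import List


def argmax(values: List[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


def standard_target(reward: float, q_target_next: List[float], gamma: float, done: bool = False) -> float:
    # Standard DQN: the target network both selects and evaluates the next action.
    if done:
        return reward
    return reward + gamma * max(q_target_next)


def double_dqn_target(
    reward: float,
    q_online_next: List[float],
    q_target_next: List[float],
    gamma: float,
    done: bool = False,
) -> float:
    # Double DQN: the online network selects the action, the target network evaluates it.
    if done:
        return reward
    best_action = argmax(q_online_next)
    return reward + gamma * q_target_next[best_action]


def dueling_q(state_value: float, advantages: List[float]) -> List[float]:
    # Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a').
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + adv - mean_adv for adv in advantages]
```

For example, with online next-state values [1.0, 3.0] and target values [2.5, 1.5], the standard target bootstraps from 2.5, while Double DQN bootstraps from 1.5, the target network's estimate for the action the online network prefers.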

These variants improved on the original DQN's results and helped establish Deep Q-Networks as a practical tool for complex environments.

Policy Gradient Techniques

Policy Gradient Techniques optimize an agent's policy directly: they adjust the parameters of the policy function along the gradient of the expected return. Because they do not need to compare the value of every action, they are particularly effective in high-dimensional action spaces.

They work by sampling trajectories from the agent's current policy, using the observed rewards to estimate the gradient, and then updating the policy parameters by gradient ascent.
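As a sketch of how sampled rewards become a gradient, the code below computes the discounted rewards-to-go G_t for one trajectory and the resulting Monte Carlo gradient estimate, the sum over t of G_t * grad log pi(a_t | s_t), for a tabular softmax policy. The tabular parameterization and the function names are assumptions chosen to keep the example dependency-free.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rewards_to_go(rewards: List[float], gamma: float) -> List[float]:
    """Discounted return from each step: G_t = r_t + gamma * G_{t+1}."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def policy_gradient_estimate(
    theta: List[List[float]],
    trajectory: List[Tuple[int, int, float]],
    gamma: float,
) -> List[List[float]]:
    """Monte Carlo estimate of grad J = sum_t G_t * grad log pi(a_t | s_t).

    theta[s][a] are the logits of a tabular softmax policy; trajectory holds
    (state, action, reward) tuples from one episode.
    """
    grad = [[0.0] * len(row) for row in theta]
    returns = rewards_to_go([r for _, _, r in trajectory], gamma)
    for (s, a, _), g in zip(trajectory, returns):
        probs = softmax(theta[s])
        for b in range(len(probs)):
            # For a softmax policy, d log pi(a|s) / d theta[s][b] = 1[b == a] - pi(b|s).
            grad[s][b] += g * ((1.0 if b == a else 0.0) - probs[b])
    return grad
```

Adding a small multiple of this estimate to `theta` is one gradient-ascent step on the expected return.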

The simplest way to implement a Policy Gradient method is REINFORCE, which estimates the gradient from the Monte Carlo returns of complete episodes. REINFORCE's gradient estimates are unbiased but have high variance, so learning can be slow and unstable.
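Putting the gradient estimate into a training loop gives REINFORCE. This minimal sketch runs it on a hypothetical three-armed Bernoulli bandit in which every episode is a single step, so the return is just the reward. The arm probabilities, learning rate, episode count, and function name are illustrative assumptions.

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(
    arm_probs: List[float], episodes: int = 5000, lr: float = 0.1, seed: int = 0
) -> List[float]:
    """REINFORCE on a one-step Bernoulli bandit; returns the final action probabilities."""
    rng = random.Random(seed)
    theta = [0.0] * len(arm_probs)  # policy logits, one per arm
    for _ in range(episodes):
        probs = softmax(theta)
        # Sample an action from the current stochastic policy.
        a = rng.choices(range(len(theta)), weights=probs)[0]
        # One-step episode, so the Monte Carlo return G is just the sampled reward.
        g = 1.0 if rng.random() < arm_probs[a] else 0.0
        # Gradient ascent: theta += lr * G * grad log pi(a).
        for b in range(len(theta)):
            theta[b] += lr * g * ((1.0 if b == a else 0.0) - probs[b])
    return softmax(theta)


if __name__ == "__main__":
    print(reinforce_bandit([0.1, 0.5, 0.9]))
```

Because no baseline is subtracted from the return, every update is noisy; this is the variance problem that later methods in this article are designed to reduce.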

That's where variants like Proximal Policy Optimization come in: they improve on the basic approach by limiting how much each update can change the policy, which makes learning more stable.

Policy Gradient methods are especially useful in continuous action spaces, where value-based methods such as DQN struggle because choosing an action requires maximizing the Q-function over all possible actions. By combining policy updates with value function approximation, you arrive at Actor-Critic methods, which make Policy Gradient techniques a key tool in your reinforcement learning toolkit.

Actor-Critic Methods

Anyone approaching reinforcement learning will find Actor-Critic methods a powerful tool. They combine two components: an "actor" that selects actions according to a policy and a "critic" that evaluates those actions by estimating a value function.

Because the critic's estimates replace noisy Monte Carlo returns, Actor-Critic methods usually learn more stably than pure policy gradient methods such as REINFORCE. The actor updates the policy in the direction suggested by the critic, which reduces the variance of the policy gradient estimates and often speeds up convergence.
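Here is a minimal tabular sketch of a one-step actor-critic on a hypothetical five-state chain where only reaching the right end pays a reward. The critic learns V(s) from the TD error delta = r + gamma * V(s') - V(s), and the actor uses that same delta in place of a Monte Carlo return. The environment, the `step` function, and all hyperparameters are assumptions for illustration.

```python
import math
import random
from typing import List, Tuple

N_STATES = 5  # hypothetical chain: states 0..4, state 4 is the terminal goal
LEFT, RIGHT = 0, 1


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Hypothetical environment: reward 1.0 only on reaching the right end."""
    nxt = max(0, state - 1) if action == LEFT else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def actor_critic(episodes=500, gamma=0.9, lr_actor=0.1, lr_critic=0.2, max_steps=100, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]  # actor: softmax logits per state
    v = [0.0] * N_STATES  # critic: state-value estimates
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            probs = softmax(theta[s])
            a = rng.choices([LEFT, RIGHT], weights=probs)[0]
            s2, r, done = step(s, a)
            # Critic: TD error, treating the value of the terminal state as 0.
            target = r if done else r + gamma * v[s2]
            delta = target - v[s]
            v[s] += lr_critic * delta
            # Actor: push log pi(a|s) up if delta > 0, down if delta < 0.
            for b in (LEFT, RIGHT):
                theta[s][b] += lr_actor * delta * ((1.0 if b == a else 0.0) - probs[b])
            if done:
                break
            s = s2
    return theta, v
```

After training, the policy in every non-terminal state should favor `RIGHT`, and the critic's values should grow toward the goal.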

You can use variants such as Advantage Actor-Critic (A2C), which weights policy updates by the advantage function A(s, a) = Q(s, a) - V(s), or Soft Actor-Critic, which adds entropy regularization to encourage exploration in continuous action spaces. The entropy term keeps the policy stochastic, which helps the agent avoid settling too early on a suboptimal behavior.
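To show how the advantage and an entropy bonus enter an actor's objective, this sketch evaluates an A2C-style objective on a batch of already-sampled steps. It estimates the advantage as the observed return minus the critic's V(s); the coefficient `beta` and the function names are assumptions, and a real implementation would differentiate this quantity with an autodiff library while treating the advantage as a constant.

```python
import math
from typing import List


def entropy(probs: List[float]) -> float:
    """H(pi) = -sum_a pi(a) log pi(a); largest for a uniform policy."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def a2c_objective(
    probs_batch: List[List[float]],
    actions: List[int],
    returns: List[float],
    values: List[float],
    beta: float = 0.01,
) -> float:
    """Actor objective to maximize: mean of log pi(a|s) * A(s, a) + beta * H(pi(.|s))."""
    total = 0.0
    for probs, a, g, v in zip(probs_batch, actions, returns, values):
        advantage = g - v  # better than the critic expected -> positive advantage
        total += math.log(probs[a]) * advantage + beta * entropy(probs)
    return total / len(actions)
```

A positive advantage makes the objective reward raising the chosen action's probability; a negative one rewards lowering it, while the entropy bonus always favors a more spread-out policy.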

Actor-Critic methods are widely used in applications like robotics and video games, where they've demonstrated success in training agents to perform complex tasks.

Proximal Policy Optimization

Proximal Policy Optimization (PPO) is a policy gradient algorithm that has gained widespread acceptance because its policy updates are stable and reliable.

PPO works with both discrete and continuous action spaces, including high-dimensional ones, which makes it suitable for complex tasks such as continuous control.

PPO is also robust and relatively easy to implement, and it has been applied in both robotics and gaming.

PPO has achieved strong results on a range of benchmarks, including Atari games and continuous control tasks. Its stability comes from its clipped objective function, which limits how far the probability ratio between the new and old policies can move in a single update.
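The clipped objective fits in a few lines. This sketch computes the clipped surrogate, the mean over t of min(r_t * A_t, clip(r_t, 1 - eps, 1 + eps) * A_t) with r_t = pi_new(a_t | s_t) / pi_old(a_t | s_t). It takes precomputed log-probabilities and advantages as plain lists; the function name is illustrative, and `clip_eps = 0.2` is a common default rather than a required value.

```python
import math
from typing import List


def ppo_clip_objective(
    new_log_probs: List[float],
    old_log_probs: List[float],
    advantages: List[float],
    clip_eps: float = 0.2,
) -> float:
    """Clipped surrogate objective (to maximize) averaged over a batch."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the min makes the objective a pessimistic bound on the unclipped one.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

Once the ratio leaves [1 - eps, 1 + eps] in the direction that would increase the objective, further movement earns nothing, so the optimizer has no incentive to push the policy far from the one that collected the data.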

This stability makes PPO a common first choice for many applications, including robotics and gaming.

Soft Actor-Critic Algorithms

Soft Actor-Critic (SAC) takes a distinct approach to reinforcement learning. It is an off-policy actor-critic method that maximizes expected reward plus the entropy of the policy, which encourages exploration in continuous action spaces. This approach is particularly useful for AI agents that need to learn complex behaviors.

Some key features of Soft Actor-Critic Algorithms include:

- Using two Q-function approximators, whose minimum is taken to curb overestimation, alongside a policy network to stabilize learning

- Improving sample efficiency through a replay buffer

- Enabling effective exploration in continuous action spaces

- Achieving state-of-the-art results, at the time of its publication, on continuous control benchmarks in OpenAI Gym

- Being applied in real-world robotics applications because of its robust nature
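The twin Q-functions and the entropy term meet in SAC's soft Bellman target. This sketch computes that target and the corresponding policy loss for a single transition. It assumes a fixed temperature `alpha` (later SAC versions tune it automatically), and the function names and plain-float inputs are illustrative stand-ins for network outputs.

```python
def sac_target(
    reward: float,
    done: bool,
    q1_next: float,
    q2_next: float,
    next_log_prob: float,
    gamma: float = 0.99,
    alpha: float = 0.2,
) -> float:
    """Soft target y = r + gamma * (1 - d) * (min(Q1', Q2') - alpha * log pi(a'|s')).

    q1_next, q2_next: target Q-values at (s', a'), with a' sampled from the current policy.
    """
    soft_value = min(q1_next, q2_next) - alpha * next_log_prob
    return reward + gamma * (0.0 if done else 1.0) * soft_value


def sac_policy_loss(log_prob: float, q1: float, q2: float, alpha: float = 0.2) -> float:
    """Policy loss to minimize: alpha * log pi(a|s) - min(Q1, Q2), with a ~ pi(.|s)."""
    return alpha * log_prob - min(q1, q2)
```

Because `-alpha * log_prob` is larger for less likely actions, both functions reward a policy that stays random where that costs little value.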

Frequently Asked Questions

How Many Types of Reinforcement Learning Are There in AI?

There is no single fixed number. You'll usually see reinforcement learning methods grouped along a few axes: model-based vs. model-free, value-based vs. policy-based (actor-critic methods combine both), and on-policy vs. off-policy.

What Is an Example of Reinforcement Learning in AI?

You see reinforcement learning in AI in game playing, autonomous vehicles, and robot training, as well as in optimization tasks such as financial trading and personalized recommendations.

What Is the Most Popular Reinforcement Learning Algorithm?

There is no single answer, but Deep Q-Networks, which extend Q-learning with neural networks and temporal-difference updates, and Proximal Policy Optimization are among the most widely used reinforcement learning algorithms.

What Are the Four Different Types of Learning in AI?

The four types most often listed are supervised, unsupervised, semi-supervised, and reinforcement learning. Self-supervised learning is frequently added to this list as well.

Conclusion

You've investigated five key reinforcement learning methods for AI agents: Deep Q-Networks, Policy Gradient Techniques, Actor-Critic Methods, Proximal Policy Optimization, and Soft Actor-Critic. You're now equipped to apply them to build agents that learn from interaction and adapt to their environments.