You'll start with the basics of Q-learning, then move to Deep Q Networks for complex environments. Next, you'll examine Policy Gradient Methods, which optimize the policy directly, and Proximal Policy Optimization, which keeps those policy updates stable. Finally, you'll learn about Actor-Critic Methods, which combine policy-based and value-based approaches. As you implement these algorithms, you'll build autonomous agents that learn by interacting with their environment, and you'll see how each one applies to real-world problems.

Key Takeaways

- Implement Q-learning to estimate action values.

- Use Deep Q Networks for environments with large state spaces.

- Optimize policies directly with Policy Gradient Methods.

- Apply Proximal Policy Optimization for stable policy updates.

- Use Actor-Critic Methods to combine policy-based and value-based learning.

Q-Learning Basics

You're diving into Q-learning, a model-free reinforcement learning algorithm: the agent learns the value of each action in each state without needing a model of the environment. This value, written Q(s, a), is the expected cumulative discounted reward for taking action a in state s and acting optimally afterward.

As you implement Q-learning, you'll see that it updates each Q-value using the immediate reward plus the discounted value of the best action available in the next state. Repeating this update over many interactions is what gradually improves the agent's decisions.
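
The update can be sketched in a few lines of Python. This is a minimal illustration, assuming Q-values live in a dictionary keyed by `(state, action)`; the function name `q_update` and its parameters `alpha` (learning rate) and `gamma` (discount factor) are illustrative choices, not part of any particular library.

```python
from collections import defaultdict

def q_update(Q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.99, done=False):
    """One tabular Q-learning step:
    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    # Bootstrap from the best next action; a terminal state has no future reward.
    best_next = 0.0 if done else max(Q[(next_state, a)] for a in actions)
    td_error = reward + gamma * best_next - Q[(state, action)]
    Q[(state, action)] += alpha * td_error
    return Q[(state, action)]

# Q-values default to 0.0 for unseen (state, action) pairs.
Q = defaultdict(float)
actions = ["left", "right"]
Q[("s1", "right")] = 2.0
value = q_update(Q, "s0", "right", reward=1.0, next_state="s1",
                 actions=actions, alpha=0.5, gamma=0.5)
print(value)  # 0.0 + 0.5 * (1.0 + 0.5 * 2.0 - 0.0) = 1.0
```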

You'll also need to manage the trade-off between exploration and exploitation. In the common ε-greedy strategy, a hyperparameter ε (epsilon) sets how often the agent picks a random action instead of the action with the highest Q-value.
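
Here is one possible sketch of ε-greedy action selection. The helper names are hypothetical, and the decay schedule in `decay_epsilon` (multiply by 0.995 per call, with a floor of 0.05) is an arbitrary assumption, shown only to illustrate gradually shifting from exploration toward exploitation.

```python
import random

def epsilon_greedy(Q, state, actions, epsilon, rng=random):
    """With probability epsilon explore (random action); otherwise exploit."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    # Greedy choice; unseen pairs count as 0.0 and ties go to the first action listed.
    return max(actions, key=lambda a: Q.get((state, a), 0.0))

def decay_epsilon(epsilon, rate=0.995, minimum=0.05):
    """Hypothetical schedule: shrink epsilon each episode but keep some exploration."""
    return max(minimum, epsilon * rate)

Q = {("s0", "left"): 0.2, ("s0", "right"): 0.7}
print(epsilon_greedy(Q, "s0", ["left", "right"], epsilon=0.0))  # always "right"
```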

Because Q-learning needs no model of the environment, it can learn an optimal policy even when the dynamics are unknown. Agents that choose actions by expected cumulative reward in this way suit applications such as robotics and game playing.
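
To see Q-learning work without knowing the dynamics, here is a self-contained sketch on a hypothetical five-state corridor: the agent starts at state 0, can step left or right, and receives a reward of 1.0 only on reaching state 4. The agent observes the environment only through `step`; the environment, the hyperparameter values, and the episode cap are all assumptions chosen for illustration.

```python
import random

N_STATES = 5        # states 0..4; reaching state 4 ends the episode
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right

def step(state, move):
    """Hypothetical environment: the agent only sees what this returns."""
    next_state = min(max(state + move, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                best = max(Q[state])  # exploit, breaking ties at random
                a = rng.choice([i for i in range(2) if Q[state][i] == best])
            next_state, reward, done = step(state, ACTIONS[a])
            target = reward if done else reward + gamma * max(Q[next_state])
            Q[state][a] += alpha * (target - Q[state][a])
            state = next_state
            if done:
                break
    return Q

Q = train()
policy = ["right" if q[1] > q[0] else "left" for q in Q[:-1]]
print(policy)
```

After training, the greedy action in every non-terminal state should be "right", since that is the shortest path to the reward.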

Deep Q Networks

Deep Q Networks (DQN) pick up where tabular Q-learning leaves off. Instead of storing a table of Q-values, they use a deep neural network to approximate them, which makes the high-dimensional state spaces typical of complex environments, such as the raw pixels of a video game, tractable.

Two additions stabilize DQN training. Experience replay stores past transitions and trains on random samples of them, which breaks the correlation between consecutive steps. A separate target network, updated only periodically, supplies consistent target Q-values during updates.
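
A minimal sketch of these two pieces, using plain Python in place of a neural network: a replay buffer built on `collections.deque`, and the target computation y = r + γ · max_a' Q_target(s', a'). The class and function names are hypothetical, and `target_q` is an assumed stand-in for the target network's forward pass.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions."""
    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # the oldest transitions drop off automatically

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform random sampling breaks the correlation between consecutive steps.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)

def dqn_targets(batch, target_q, gamma=0.99):
    """y = r for terminal transitions, else r + gamma * max_a' Q_target(s', a')."""
    return [r if done else r + gamma * max(target_q(s2))
            for (_, _, r, s2, done) in batch]

buffer = ReplayBuffer(capacity=3)
for t in range(4):
    buffer.push(t, 0, 0.0, t + 1, False)  # the 4th push evicts the oldest transition
batch = buffer.sample(2)

def target_q(state):
    # Stand-in for the target network's output: one Q-value per action.
    return [0.0, 2.0]

print(dqn_targets([(0, 1, 1.0, 1, False), (1, 0, 0.5, 2, True)], target_q, gamma=0.5))
# [2.0, 0.5]
```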

As you implement DQN, you'll need to tune hyperparameters such as the learning rate, the discount factor, and how often the target network is updated. These choices strongly affect whether training stays stable and how well the agent ultimately performs.
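
One way to organize these hyperparameters is a small config object, paired with a periodic "hard" copy of the online weights into the target network. This is a sketch under stated assumptions: `DQNConfig` and `maybe_sync_target` are hypothetical names, the default values are placeholders rather than recommendations, and a dictionary of lists stands in for real network weights.

```python
import copy
from dataclasses import dataclass

@dataclass
class DQNConfig:
    # Placeholder values for illustration only; tune them per environment.
    learning_rate: float = 1e-4
    gamma: float = 0.99              # discount factor
    target_update_every: int = 1000  # steps between target-network syncs

def maybe_sync_target(step, online_params, target_params, cfg):
    """Hard update: every `target_update_every` steps, copy the online
    network's weights into the target network; otherwise keep them frozen."""
    if step % cfg.target_update_every == 0:
        return copy.deepcopy(online_params)
    return target_params

cfg = DQNConfig(target_update_every=4)
online = {"w": [0, 0]}  # hypothetical stand-in for network weights
target = copy.deepcopy(online)
for step in range(1, 10):
    online["w"][0] += 1  # pretend a gradient step changed the online weights
    target = maybe_sync_target(step, online, target, cfg)
print(online["w"][0], target["w"][0])  # 9 8
```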

DQN achieved landmark results on Atari 2600 games, reaching or surpassing human-level performance on many of them while learning directly from raw pixels. That track record makes it a key algorithm in your toolkit for complex reinforcement learning tasks.

Policy Gradient Methods

Policy Gradient Methods offer a different approach to reinforcement learning, one that's particularly well-suited to high-dimensional or continuous action spaces. These methods optimize the policy directly, adjusting its parameters in the direction that increases expected reward. This makes them useful in complex environments where value-based methods struggle.

Policy gradient methods follow the gradient of the expected reward with respect to the policy parameters. Because the learned policy is stochastic, sampling actions from it naturally explores the environment.
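
For a concrete view of the policy gradient, here is a sketch of the REINFORCE update for a softmax policy over two actions. It assumes a hypothetical one-step problem (a two-armed bandit), so the return G is simply the reward. For a softmax policy, the gradient of log π(a) with respect to logit i is 1[i = a] - π(i).

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(theta, action, ret, lr):
    """theta <- theta + lr * G * grad log pi(action), where for a softmax
    policy d log pi(a) / d theta_i = 1[i == a] - pi(i)."""
    probs = softmax(theta)
    return [t + lr * ret * ((1.0 if i == action else 0.0) - p)
            for i, (t, p) in enumerate(zip(theta, probs))]

rng = random.Random(0)
theta = [0.0, 0.0]    # logits: both actions start equally likely
rewards = [0.0, 1.0]  # hypothetical bandit: action 1 always pays 1.0
for _ in range(500):
    a = rng.choices(range(2), weights=softmax(theta))[0]  # sample from the policy
    theta = reinforce_step(theta, a, rewards[a], lr=0.1)
print(softmax(theta))
```

Because the policy is stochastic, the agent keeps sampling both actions early on, and the probability of the rewarding action should climb toward 1 as training proceeds.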

Actor-critic methods are a popular variant of policy gradient methods. They combine value-based and policy-based approaches, using an actor to propose actions and a critic to evaluate them, which improves learning stability and efficiency.

You'll also encounter challenges such as high variance in the gradient estimates and slow convergence. Subtracting a baseline from the return is a standard variance-reduction technique that helps mitigate these issues, which is part of what makes policy gradient methods a powerful tool in reinforcement learning.
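
A baseline is easy to illustrate: compute the discounted return from each time step, then subtract a baseline before using it to weight the gradient of log π(a_t | s_t). This sketch assumes the baseline is simply passed in (for example, a running average of past returns); the helper names are hypothetical. Replacing it with a learned state-value estimate leads naturally to actor-critic methods.

```python
def returns_to_go(rewards, gamma):
    """Discounted return from each step: G_t = r_t + gamma * G_{t+1}."""
    G, out = 0.0, []
    for r in reversed(rewards):
        G = r + gamma * G
        out.append(G)
    return out[::-1]

def advantages(rewards, gamma, baseline):
    """Returns minus a baseline; these weight grad log pi(a_t | s_t)."""
    return [g - baseline for g in returns_to_go(rewards, gamma)]

print(returns_to_go([1.0, 0.0, 2.0], gamma=0.5))             # [1.5, 1.0, 2.0]
print(advantages([1.0, 0.0, 2.0], gamma=0.5, baseline=1.5))  # [0.0, -0.5, 0.5]
```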

Proximal Policy Optimization

As you explore Proximal Policy Optimization (PPO), you'll see that its main goal is stable training: it improves the policy steadily while avoiding the oversized updates that can derail plain policy gradient methods.

PPO achieves this with a clipped objective function. The objective depends on the ratio between the new and old policies' probabilities for each action taken, and clipping that ratio to a small range around 1 removes any incentive to move the new policy far from the old one.
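
The clipped objective can be written directly from its definition. This sketch assumes you already have per-sample log-probabilities under the new and old policies plus advantage estimates; the function name is hypothetical, `clip_eps=0.2` is only an illustrative default, and a real implementation would maximize the objective with automatic differentiation rather than evaluate it with plain floats.

```python
import math

def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Clipped surrogate objective (to be maximized):
    mean over t of min(r_t * A_t, clip(r_t, 1 - eps, 1 + eps) * A_t),
    with probability ratio r_t = pi_new(a_t | s_t) / pi_old(a_t | s_t)."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)  # ratio from log-probabilities
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)  # pessimistic (lower) bound
    return total / len(advantages)

# The new policy made an action 1.5x as likely as before (0.6 vs 0.4).
new_lp, old_lp = [math.log(0.6)], [math.log(0.4)]
print(ppo_clip_objective(new_lp, old_lp, [1.0]))   # gain capped near 1.2
print(ppo_clip_objective(new_lp, old_lp, [-1.0]))  # full penalty, about -1.5
```

Clipping only caps the benefit of moving further in a favorable direction; when the advantage is negative, the minimum keeps the full, unclipped penalty.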

As you implement PPO, you'll notice that it alternates between collecting a batch of experience with the current policy (often spanning several episodes) and running several epochs of mini-batch updates on that batch. Reusing each batch for multiple updates makes learning more sample-efficient.
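
The reuse of collected data can be sketched as an index generator: each epoch reshuffles the rollout and splits it into mini-batches, and each mini-batch would then feed the clipped objective. The helper name and the sizes in the usage lines are arbitrary assumptions.

```python
import random

def minibatches(n_samples, batch_size, epochs, rng=random):
    """Yield index batches: several passes (epochs) over the same rollout,
    reshuffled on each pass and split into mini-batches."""
    indices = list(range(n_samples))
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, n_samples, batch_size):
            yield indices[start:start + batch_size]  # slicing returns a copy

rng = random.Random(0)
batches = list(minibatches(n_samples=8, batch_size=4, epochs=3, rng=rng))
print(len(batches))  # 3 epochs x 2 mini-batches = 6
```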

The agent still learns by interacting with the environment, but each round of updates stays close to the policy that collected the data, so performance improves steadily instead of collapsing after a bad update.

PPO has been applied successfully in domains including game playing and robotics, and its simple implementation and robust performance have made it a popular choice for policy optimization in reinforcement learning.

Actor-Critic Methods

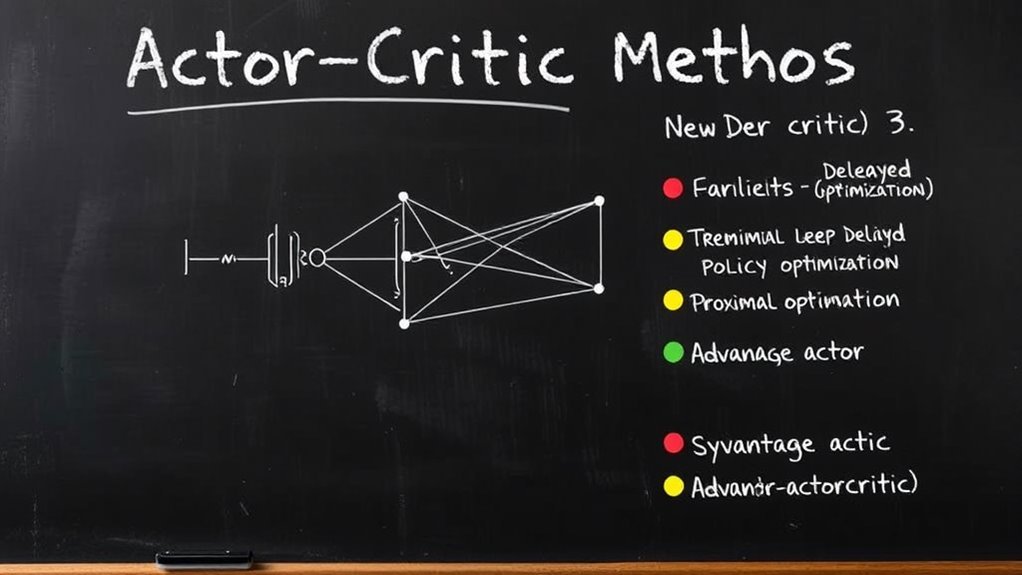

How do you combine the benefits of policy-based and value-based methods in reinforcement learning? You can achieve this by using actor-critic methods, which utilize the strengths of both approaches.

These methods have two components: the actor, which chooses actions according to the policy, and the critic, which evaluates those actions by estimating a value function. The actor updates the policy parameters in the direction the critic's evaluation suggests, so decision-making improves over time.
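
The actor-critic loop can be sketched with tables instead of networks. In this minimal version, assumed for illustration, the critic's one-step TD error δ = r + γV(s') - V(s) serves as the advantage estimate that scales the actor's policy gradient step; the function name, the one-state problem, and the learning rates are hypothetical.

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def actor_critic_step(theta, V, s, a, r, s_next, done,
                      gamma=0.99, lr_actor=0.1, lr_critic=0.1):
    """One-step tabular actor-critic update.
    Critic: TD error delta = r + gamma * V(s') - V(s), used as the advantage.
    Actor: move the logits of state s along delta * grad log pi(a | s)."""
    target = r if done else r + gamma * V[s_next]
    delta = target - V[s]
    V[s] += lr_critic * delta  # critic moves toward the TD target
    probs = softmax(theta[s])
    for i in range(len(theta[s])):
        grad_log = (1.0 if i == a else 0.0) - probs[i]
        theta[s][i] += lr_actor * delta * grad_log  # actor follows the critic
    return delta

rng = random.Random(0)
theta = [[0.0, 0.0]]  # one state, two actions
V = [0.0]
rewards = [0.0, 1.0]  # hypothetical: action 1 always pays 1.0
for _ in range(1000):
    a = rng.choices(range(2), weights=softmax(theta[0]))[0]
    actor_critic_step(theta, V, 0, a, rewards[a], 0, done=True)
print(softmax(theta[0]), V[0])
```

Here the critic's value estimate plays the role of the baseline: rewards above V(s) push the actor toward the chosen action, and rewards below it push the actor away.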

You'll find that actor-critic methods, such as Advantage Actor-Critic (A2C), can be strengthened with:

- Deep neural networks to approximate complex policies and value functions

- Policy gradient methods to update the actor's parameters

- Advanced optimization techniques to stabilize training and enhance performance

Frequently Asked Questions

How Do You Implement Reinforcement Learning Algorithms?

Define the environment (its states, actions, and rewards), then pick an algorithm that fits it: Q-learning or DQN for discrete actions, or policy gradient and actor-critic methods for large or continuous action spaces. Then train the agent and tune hyperparameters until its decisions improve.

How Many Ways Can You Implement Reinforcement Learning?

There is no fixed number. Beyond the choice of algorithm, you can set up reinforcement learning in many ways: with multiple interacting agents (multi-agent systems), as continual learning that keeps adapting after deployment, or in real-world applications such as robotics.

What Are the Three Approaches to Implement a Reinforcement Learning Algorithm?

The three commonly cited approaches are value-based methods (such as Q-learning), policy-based methods (such as policy gradients), and model-based methods, which learn or use a model of the environment. Techniques such as exploration strategies and reward shaping can be combined with any of them.

What Are the Main Reinforcement Learning Algorithms?

The main algorithms include Q-learning and its deep variant DQN, policy gradient methods such as PPO, and actor-critic methods that combine the two approaches.

Conclusion

You've now gained a solid understanding of key reinforcement learning algorithms: Q-learning, Deep Q Networks, Policy Gradient Methods, Proximal Policy Optimization, and Actor-Critic Methods. Implementing them and applying them to real-world problems is the best way to refine your skills in reinforcement learning.