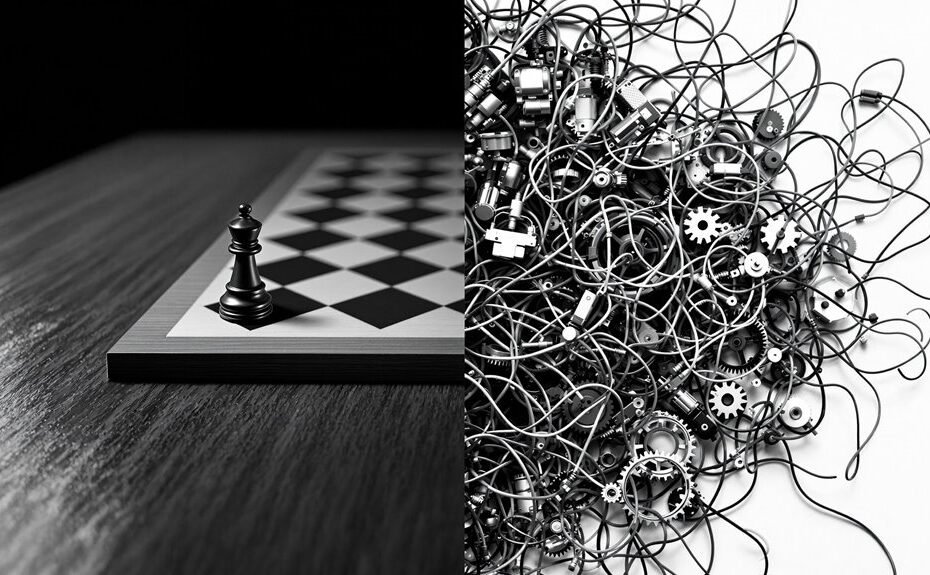

You're designing a reward function for your reinforcement learning agent, and you're torn between a simple and a complex approach. Simple reward functions give clear, easy-to-interpret feedback and are harder to exploit, but when that feedback is sparse they can slow learning. Complex rewards, in contrast, offer more frequent and nuanced guidance, but can introduce ambiguity and unintended behaviors if not designed carefully. As you weigh the pros and cons, keep in mind that finding the right balance between simplicity and complexity is vital for effective learning, and that this decision can make or break your agent's success.

Need-to-Knows

- Simple reward functions provide clear feedback that is easy to align with the task goal, whereas complex rewards may introduce ambiguity.

- Sparse rewards are suitable for tasks with clear end-goals, but can slow learning due to infrequent feedback, whereas dense rewards facilitate quicker learning.

- Complex rewards with shaped or intermediate feedback promote faster learning and decision-making, but may lead to unintended behaviors if poorly designed.

- Balancing complexity and simplicity in reward design is crucial: overly complex rewards can confuse the agent, while overly sparse ones exacerbate the credit assignment problem.

- Simple rewards that only signal success or failure may lead to slower learning, while richer rewards can speed learning up but require careful design to avoid unintended consequences.

Understanding Reward Functions

In reinforcement learning, the reward function is the feedback mechanism: it quantifies your agent's performance and guides its behavior toward specific goals.

As you design your reward function, you'll need to take into account the type of feedback you want to provide – sparse rewards that offer feedback only at the end of a task or dense rewards that provide frequent feedback throughout. The choice between these two will greatly impact your agent's learning efficiency.
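To make the sparse versus dense distinction concrete, here is a minimal sketch on a toy 1-D track. The goal position and the function names `sparse_reward` and `dense_reward` are assumptions made up for illustration, not part of any library.

```python
GOAL = 5  # hypothetical goal position on a 1-D track


def sparse_reward(position: int) -> float:
    """Reward only when the goal is reached; zero everywhere else."""
    return 1.0 if position == GOAL else 0.0


def dense_reward(position: int) -> float:
    """Reward on every step: the negative distance still to cover."""
    return float(-abs(GOAL - position))


# Positions visited while walking from the start (0) to the goal (5).
trajectory = [1, 2, 3, 4, 5]
sparse_signal = [sparse_reward(p) for p in trajectory]
dense_signal = [dense_reward(p) for p in trajectory]

print(sparse_signal)  # [0.0, 0.0, 0.0, 0.0, 1.0]
print(dense_signal)   # [-4.0, -3.0, -2.0, -1.0, 0.0]
```

With the sparse version the agent sees no difference between steps until the very end, while the dense version tells it on every step whether it is getting closer.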

Reward shaping can also improve learning by providing intermediate feedback and guiding your agent with heuristics or domain knowledge.
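One well-known way to add intermediate feedback is potential-based shaping, where the extra reward is the discounted change in a heuristic "potential" of the state. The sketch below assumes a toy 1-D track, a goal at position 5, and a negative-distance potential; `GOAL`, `GAMMA`, `potential`, and `shaped_reward` are illustrative names chosen here.

```python
GOAL = 5      # hypothetical goal position on a 1-D track
GAMMA = 0.99  # discount factor (assumed value)


def potential(state: int) -> float:
    """Heuristic potential: less negative the closer the state is to the goal."""
    return float(-abs(GOAL - state))


def shaped_reward(env_reward: float, state: int, next_state: int) -> float:
    """Environment reward plus the shaping term gamma * phi(s') - phi(s)."""
    return env_reward + GAMMA * potential(next_state) - potential(state)


toward = shaped_reward(0.0, state=2, next_state=3)  # step toward the goal
away = shaped_reward(0.0, state=2, next_state=1)    # step away from the goal
print(toward > 0 > away)  # True
```

Shaping terms of this form are known to leave the optimal policy unchanged, which makes them a reasonable default when you want to inject domain knowledge. The right potential for your task is still a design choice.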

However, careful design is essential: poorly specified rewards can lead to unintended behaviors, known as reward hacking, where agents exploit loopholes in the reward function.
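A toy example shows how such a loophole can arise. The sketch below assumes a naive bonus of +1 for any step that ends closer to the goal; the track, `progress_bonus`, and `total_bonus` are hypothetical and exist only to illustrate the failure mode.

```python
GOAL = 10  # hypothetical goal position on a 1-D track


def progress_bonus(state: int, next_state: int) -> float:
    """Naive bonus: +1 for any step that ends closer to the goal, else 0."""
    return 1.0 if abs(GOAL - next_state) < abs(GOAL - state) else 0.0


def total_bonus(path: list[int]) -> float:
    """Sum the bonus over consecutive pairs of positions in a path."""
    return sum(progress_bonus(s, s_next) for s, s_next in zip(path, path[1:]))


direct_path = list(range(0, GOAL + 1))  # walk straight to the goal
loop_path = [0, 1] * 20                 # step forward, step back, repeat

print(total_bonus(direct_path))  # 10.0
print(total_bonus(loop_path))    # 20.0
```

The looping path never reaches the goal, yet it collects twice the bonus of the direct path, because stepping back costs nothing. Penalizing backward steps or tying the bonus to net progress would close this particular loophole.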

The complexity of your reward function also affects how much exploration your agent needs and how it balances immediate against long-term rewards during learning.

Types of Reward Functions

With a solid understanding of reward functions under your belt, it's time to investigate the various types that can be used to guide your agent's behavior.

You've got five main types to evaluate: sparse, dense, shaped, inverse, and composite.

Sparse reward functions provide feedback only when your agent achieves specific outcomes, making them suitable for tasks with clear end-goals like maze navigation.

Dense reward functions, conversely, offer frequent feedback based on intermediate progress towards goals, facilitating quicker learning and behavior refinement, as seen in robot arm manipulation.

Shaped reward functions incorporate additional hints about desired behavior through intermediate rewards, accelerating learning and guiding agents towards ideal actions.

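Inverse reward functions, as the term is used here, focus on discouraging undesirable behaviors through penalties, and are often used in safety-critical applications like autonomous driving. They should not be confused with inverse reinforcement learning, which infers a reward function from demonstrations.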

Finally, composite reward functions integrate multiple reward signals into a single function, allowing for nuanced decision-making in complex tasks.
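As a rough illustration of how these types can be combined, the sketch below builds a composite reward from a sparse goal bonus, a dense distance term, and a penalty. The `StepInfo` fields and the weight values are assumptions chosen for the example, not values from any particular environment.

```python
from dataclasses import dataclass


@dataclass
class StepInfo:
    """Hypothetical per-step signals an environment might expose."""
    reached_goal: bool
    distance_to_goal: float
    collided: bool


def composite_reward(info: StepInfo,
                     w_goal: float = 10.0,
                     w_progress: float = 0.5,
                     w_collision: float = 5.0) -> float:
    """Weighted sum of a sparse goal bonus, a dense distance term, and a penalty."""
    goal_term = w_goal if info.reached_goal else 0.0
    progress_term = -w_progress * info.distance_to_goal
    collision_term = -w_collision if info.collided else 0.0
    return goal_term + progress_term + collision_term


print(composite_reward(StepInfo(False, 4.0, False)))  # -2.0
print(composite_reward(StepInfo(False, 4.0, True)))   # -7.0
print(composite_reward(StepInfo(True, 0.0, False)))   # 10.0
```

Keeping each term in its own variable makes it easier to log the components separately, which helps when you need to work out which term is driving an unexpected behavior.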

Designing Effective Rewards

Now that you've investigated the various types of reward functions, it's clear that each has its strengths and weaknesses. When designing effective rewards, you need to strike a balance between simplicity and complexity.

Simple reward functions provide clear feedback and make it easier to align agent behavior with the goal. However, they might not capture the nuances of real-world tasks. Complex reward functions, in contrast, can accommodate multifaceted objectives but may introduce ambiguity and, when their terms conflict, slow convergence.

To overcome these limitations, you can incorporate negative rewards for undesirable actions, making the specification more precise and guiding agents away from harmful behaviors. Reward shaping techniques can also facilitate faster convergence by providing intermediate rewards that guide agents toward desired behaviors without overwhelming them with complexity.

Furthermore, consider using continuous and differentiable reward functions in complex environments, allowing for smoother learning updates and better handling of continuous state spaces. By carefully designing your reward function, you can create effective rewards that promote desired agent behavior and support a successful learning process.
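To see what a smooth reward looks like next to a discontinuous one, consider this small sketch. The Gaussian-style form, the `tolerance` and `scale` parameters, and both function names are assumptions for illustration; other smooth shapes would work just as well.

```python
import math


def step_reward(distance: float, tolerance: float = 0.1) -> float:
    """Discontinuous: 1 inside the tolerance band, 0 everywhere else."""
    return 1.0 if distance <= tolerance else 0.0


def smooth_reward(distance: float, scale: float = 1.0) -> float:
    """Continuous and differentiable: decays smoothly as distance grows."""
    return math.exp(-(distance / scale) ** 2)


for d in (2.0, 1.0, 0.5, 0.0):
    print(f"distance={d:.1f}  step={step_reward(d):.1f}  smooth={smooth_reward(d):.3f}")
```

The step version reports the same value at every distance outside the tolerance band, whereas the smooth version increases steadily as the agent approaches the target, so small improvements are always visible.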

Impact on Learning Outcomes

The learning outcomes of your reinforcement learning (RL) agent heavily rely on the design of the reward function. A well-crafted reward function can greatly impact your agent's behavior and decision-making.

Simple reward functions, like binary wins or losses, can lead to slower learning since they only provide feedback at the end of an episode. This makes it difficult for agents to associate actions with outcomes during the task.

Conversely, complex reward functions that incorporate shaped or intermediate rewards can accelerate learning by providing frequent feedback. This helps agents refine their behavior and improve decision-making throughout the task.

By using dense rewards in complex environments, you can promote faster convergence and more efficient exploration.

Nonetheless, poorly designed complex reward functions can lead to unintended behaviors or reward hacking, hindering learning outcomes.

It's essential to align your reward design with true task objectives to optimize your agent's behavior and achieve long-term rewards.

Balancing Simplicity and Complexity

You're faced with a delicate trade-off when designing a reward function: balancing simplicity and complexity. On one hand, simple reward functions are easier to implement, understand, and debug. On the other, complex reward functions can capture nuanced behaviors but may require extensive tuning and testing to avoid unintended consequences.

| Reward Design Considerations | Impact on Agent Performance |
|---|---|
| Simplicity | Clear feedback, quicker learning, and reduced risk of reward hacking |
| Complexity | Captures nuanced behaviors, but may lead to unintended consequences and reduced learning efficiency |
| Balanced Approach | Optimizes learning efficiency, encourages exploration, and improves agent performance |
| Task Environment Consideration | Essential for designing rewards that align with desired outcomes and avoid confusion |

Popular RL Algorithms and Rewards

Designing effective reward functions becomes even more important when you consider popular reinforcement learning algorithms. Deep Q-Networks (DQN), for example, were demonstrated on Atari games using a simple reward signal derived from the game score, and they struggle in games where that score changes only rarely.

Proximal Policy Optimization (PPO), by contrast, is widely used for continuous control tasks, where dense, multi-term rewards are common and support fine-grained policy adjustments during learning. AlphaGo and AlphaZero sit at the other extreme: they tackle long-horizon games in which the reward is essentially the win or loss at the end, so the agent must plan many steps ahead, relying on search and learned value estimates to evaluate positions.

When it comes to reward design, you need to be mindful of the type of rewards you use. Sparse rewards can noticeably slow down learning processes, whereas dense rewards facilitate faster learning by providing continuous feedback throughout the task.

Careful design of reward functions is vital, as poorly structured rewards can lead to unintended behaviors and hinder overall agent performance. By understanding the intricacies of popular RL algorithms and rewards, you can create more effective reward functions that drive better learning outcomes and improve overall performance.
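One concrete example of algorithm-specific reward handling is reward clipping. The published DQN Atari setup is commonly described as mapping positive rewards to +1 and negative rewards to -1 so that one set of hyperparameters can work across games with very different score scales. The sketch below shows the idea; `clip_reward` and the sample scores are hypothetical.

```python
def clip_reward(raw_reward: float) -> float:
    """Map positive rewards to +1, negative rewards to -1, and leave 0 unchanged."""
    if raw_reward > 0:
        return 1.0
    if raw_reward < 0:
        return -1.0
    return 0.0


raw_scores = [200.0, 0.0, -50.0, 10.0]  # hypothetical per-step score changes
print([clip_reward(r) for r in raw_scores])  # [1.0, 0.0, -1.0, 1.0]
```

The trade-off is that the agent can no longer tell a large reward from a small one, so clipping suits tasks where the sign of the feedback matters more than its magnitude.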

Real-World Applications and Examples

Beyond the theoretical domain, reinforcement learning's practical applications abound, with carefully crafted reward functions driving success in diverse fields.

You'll find reinforcement learning in action in various industries, including:

- Robotics, where complex rewards optimize for energy efficiency and time taken in tasks like box stacking.

- Video Gaming, where complex reward structures incentivize achieving multiple objectives, like completing a level with minimal damage taken.

- Autonomous Vehicles, where composite reward functions balance safe navigation with penalties for risky maneuvers.

In healthcare applications, complex rewards factor in patient outcomes and quality of life improvements, promoting more nuanced decision-making.

Meanwhile, in drone delivery systems, complex rewards consider speed, energy consumption, and obstacle avoidance to enhance overall performance.

Challenges in Reward Design

As you work with reinforcement learning, you'll soon discover that crafting effective reward functions is no easy feat. One of the primary challenges in reward design is striking a balance between complexity and simplicity. Overly complex rewards can confuse agents and hinder their learning, while simple rewards may not provide sufficient feedback.

Sparse rewards, which only provide feedback at terminal states, can exacerbate the credit assignment problem, slowing down the learning process because of infrequent feedback. Ensuring consistency and stability during training is also essential, as changes in reward structure can disrupt the learning trajectory of the agent.
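The credit assignment problem is easier to see with numbers. The sketch below computes discounted returns for an episode whose only reward arrives at the final step; the discount factor of 0.9 and the five-step episode are assumptions chosen for readability.

```python
def discounted_returns(rewards: list[float], gamma: float = 0.9) -> list[float]:
    """Compute G_t = r_t + gamma * G_{t+1} for every step, working backwards."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


sparse_episode = [0.0, 0.0, 0.0, 0.0, 1.0]  # feedback only at the terminal step
returns = discounted_returns(sparse_episode)
print([round(g, 4) for g in returns])  # [0.6561, 0.729, 0.81, 0.9, 1.0]
```

Every earlier step inherits a share of the single terminal reward, but nothing in the signal says which of those steps actually mattered. The agent has to work that out over many episodes, which is why sparse feedback tends to slow learning.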

Multi-objective scenarios further complicate reward design, as conflicting goals make it difficult to design rewards that adequately reflect the priorities of different task components. Poorly designed reward functions can lead to unintended behaviors or reward hacking, where agents exploit loopholes in the system, compromising the intended outcomes.
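The sketch below shows how sensitive a multi-objective reward can be to its weights. It assumes two competing objectives, speed and energy use, combined by a weighted sum; the behaviors, the weights, and the `scalarize` function are all hypothetical.

```python
def scalarize(speed: float, energy_used: float,
              w_speed: float, w_energy: float) -> float:
    """Collapse two competing objectives into one scalar reward."""
    return w_speed * speed - w_energy * energy_used


# Two hypothetical behaviors, each described as (speed, energy_used).
fast_but_costly = (4.0, 8.0)
slow_but_frugal = (2.0, 2.0)

preferences = {}
for w_speed, w_energy in [(1.0, 0.25), (1.0, 0.5)]:
    fast = scalarize(*fast_but_costly, w_speed, w_energy)
    slow = scalarize(*slow_but_frugal, w_speed, w_energy)
    preferences[w_energy] = "fast" if fast > slow else "slow"
    print(f"w_energy={w_energy}: fast={fast}, slow={slow}, "
          f"preferred={preferences[w_energy]}")
```

Doubling the energy weight flips which behavior earns more reward, so the weights encode your priorities just as much as the individual terms do.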

To overcome these challenges, you must carefully consider the reward structure and its implications on agent learning, ensuring that the reward function aligns with the desired outcomes of the reinforcement learning system.

Best Practices for Reward Functions

You've recognized the importance of overcoming the challenges in reward design, and now it's time to focus on crafting effective reward functions. A well-designed reward function is essential to achieving desired outcomes in reinforcement learning.

To get it right, follow these best practices:

- Clearly define desired outcomes: Avoid ambiguous rewards by ensuring that the reward signal makes clear which actions lead to success.

- Maintain consistent reward assignments: Promote stable learning by keeping rewards consistent over time.

- Balance exploration and exploitation: Structure rewards to encourage the agent to explore new strategies while still focusing on the best-known actions.
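One common way to encourage exploration through the reward itself is a count-based novelty bonus that shrinks as a state is revisited. The sketch below is a minimal version under stated assumptions: states are plain strings, and the bonus scale `BETA`, the `visit_counts` table, and `reward_with_bonus` are illustrative names.

```python
import math
from collections import defaultdict

BETA = 0.5  # bonus scale (assumed value; typically tuned per task)
visit_counts = defaultdict(int)  # maps a state to how often it was visited


def reward_with_bonus(state: str, env_reward: float) -> float:
    """Add a novelty bonus of BETA / sqrt(N(s)) that shrinks with repeat visits."""
    visit_counts[state] += 1
    return env_reward + BETA / math.sqrt(visit_counts[state])


first = reward_with_bonus("room_a", 0.0)   # first visit: full bonus
for _ in range(2):
    reward_with_bonus("room_a", 0.0)       # second and third visits
fourth = reward_with_bonus("room_a", 0.0)  # fourth visit: bonus halved
print(first, fourth)  # 0.5 0.25
```

Because the bonus fades with familiarity, the agent is nudged toward unvisited states early on and gradually falls back on the environment reward alone.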

Frequently Asked Questions

What Are the Different Types of Reward Functions?

You'll encounter various reward functions, including sparse rewards for specific outcomes, dense rewards for incremental progress, and shaped rewards for guided learning. Other distinctions you'll see include intrinsic versus extrinsic rewards, continuous versus episodic settings, and multi-objective rewards. Any of them can be vulnerable to reward hacking if poorly specified.

What Is the Reward Model in RL?

The reward model is the component that assigns numerical values to your agent's actions, guiding its behavior toward desired outcomes. Designing one involves choosing a reward structure, deciding whether to use shaping techniques, and weighing intrinsic motivation against extrinsic task rewards.

What Is the Reward Function in Deep Reinforcement Learning?

In deep reinforcement learning, the reward function quantifies an agent's performance. You design it with techniques like reward shaping and intrinsic motivation to guide actions toward desired outcomes, while accounting for sparse rewards, reward noise, and competing objectives, and keeping the function transparent enough to debug.

What Is the Optimal Reward Function?

The best reward function depends on the task. You find it by balancing immediate rewards against long-term objectives, using techniques like reward shaping, multi-objective rewards, and intrinsic motivation, working around sparse-reward challenges, and tuning the function until it provides clear feedback and efficient learning.

Conclusion

As you wrap up your RL design, keep in mind that reward functions are essential to success. Simple rewards are clear and hard to exploit, while complex rewards can capture nuances and speed up learning. Strike a balance between simplicity and complexity to effectively guide your agent's behavior. By understanding the impact of rewards on learning outcomes and following best practices, you'll be well on your way to designing effective RL systems that solve real-world problems.